SARA seeks to give artificial intelligence people skills

SARA is a Socially-Aware Robot Assistant that interacts with people in a whole new way, focusing on social bonds. This supercool virtual helper can understand not only what you say but also how you say it, analyzing nonverbal signals and emotions.

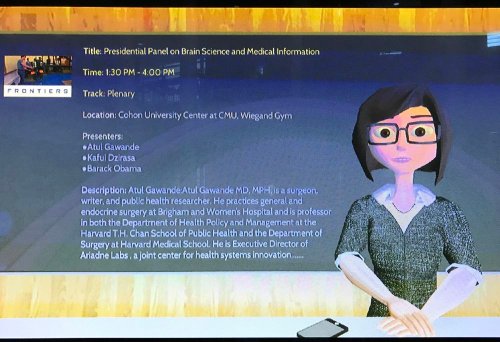

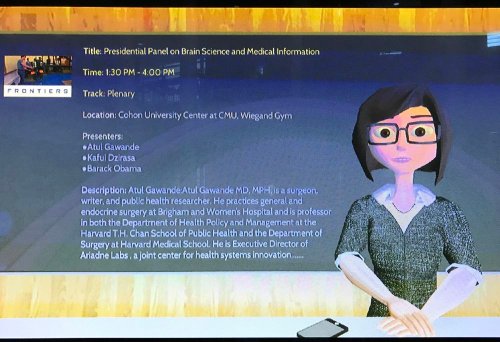

"SARA is not a physical robot made out of metal, but a kind of a virtual human projected on a screen. She relies on some very sophisticated AI algorithms to recognize the kinds of talk you use to increase the relationship or to demonstrate that you are not very close to someone else," said Justine Cassell, Carnegie Mellon University professor and director of the SARA project.

This robot can literally understand your mood: "She recognizes nonverbal cues: eye gaze, head nods and smiles. She even recognizes your vocal cues, the intonation and the way you talk."

Smart SARA then puts all of those together to understand not only the meaning of your words but also the way you convey them, the professor said. The AI gauges the closeness you feel towards her, which helps her to obtain information from you, to think about what to say next and to do a better job at looking things up for you on the Internet, for example.

SARA's creators put together quite a number of different AI branches in this particular project: "We are working at the same time on natural language, on computer graphics and on automatic planning," the director explained. "All these branches of AI come together with the goal quite opposite to the killer-robots we hear so much about. These are robots that intend to make society stronger by focusing on the social bond that we care so much about," she continued.

Justine Cassell has worked for quite a long time on building virtual humans: graphical characters on the screen with an underlying AI.

"I always built them in the same way which was to watch people collaborating with each other and then analyze those human-human collaborations as the basis for the human-virtual human collaborations," the expert said. Then she started to realize that not all of the talk between people was about the task they were doing: "Even if they were supposedly collaborating on something quite serious, they've spent a fair amount of time engaging in small talk, praising each other, teasing each other and releasing personal details. And I wondered if that was just for fun or if it played a role."

After a long analysis of this type of human conversation, Cassell came to realize that the way people use their voices, their nonverbal cues and their manner of talking to someone else depends on the nature of the task they are working on and where they are in that task.

For the moment, it is a face-to-face project: you have to come into a room and see a life-size virtual human projected on the wall. As soon as Cassell's team refines the project, they might put it on classroom computers or build it into a browser so that it could be used over the Internet.

According to Prof. Cassell, none of the tutoring systems being put in classrooms today has the kind of social awareness that might improve students' ability to learn.
