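Scientists use computer technology to recreate John F Kennedy’s voice and piece together his final speech

Date: 17/03/18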

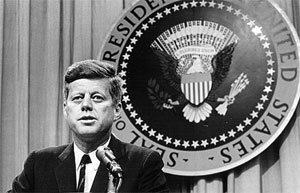

It’s been over five decades since John F Kennedy was tragically assassinated, yet technology is allowing him to deliver his final speech today.

Researchers from CereProc have joined forces with The Times to recreate Kennedy’s voice, piecing together the speech he was never able to make in Dallas on November 22, 1963.

Kennedy was killed at the age of 46 while on his way to a lunch at the Dallas Trade Mart, where he was due to give the speech.

Although Kennedy never delivered the speech, its text was preserved, and the researchers used it as their script.

To recreate Kennedy’s voice, the researchers analysed recordings from 831 of his speeches and radio addresses.

From these, they extracted 116,777 unique sound clips.

Each clip was then split in half to create a 0.4-second ‘phone’.

Speaking to The Times, Chris Pidcock, chief voice engineer at CereProc, said: “There are only 40 to 45 phones in English, so once you’ve got that set you can generate any word in the English language.

“The problem is that it would not sound natural, because one sound merges into the sound next to it, so they’re not really independent.

“You really need the sounds in the context of every other sound, and that makes the database big.”

Overall, piecing the speech together took more than two months of work, and the resulting recording is fairly convincing.

CereProc now hopes to use the same system to help people who are losing their voices create realistic digital versions of them.

©ictnews.az. All rights reserved.

Similar news

- Justin Timberlake takes stake in Facebook rival MySpace

- Wills and Kate to promote UK tech sector at Hollywood debate

- 35% of American Adults Own a Smartphone

- How does Azerbaijan use plastic cards?

- Imperial College London given £5.9m grant to research smart cities

- Search and Email Still the Most Popular Online Activities

- Nokia to ship Windows Phone in time for holiday sales

- Internet 'may be changing brains'

- Would-be iPhone buyers still face weeks-long waits

- Under pressure, China company scraps Steve Jobs doll

- Jobs was told anti-poaching idea "likely illegal"

- Angelic "Steve Jobs" loves Android in Taiwan TV ad

- Kinect for Windows gesture sensor launched by Microsoft

- Kindle-wielding Amazon dips toes into physical world

- Video game sales fall ahead of PlayStation Vita launch