Date: 04/05/16

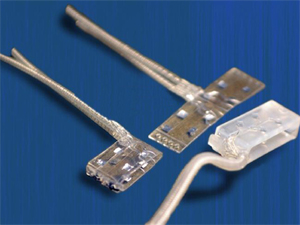

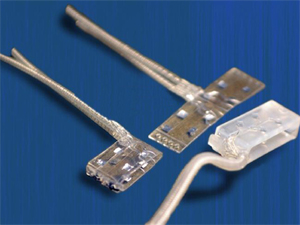

Creating a prosthetic hand that can feel

Wearing a blindfold and noise-canceling headphones, Igor Spetic gropes for the bowl in front of him, reaches into it, and picks up a cherry by its stem. He uses his left hand, which is his own flesh and blood. His right hand, though, is a plastic and metal prosthetic, the consequence of an industrial accident. Spetic is a volunteer in our research at the Louis Stokes Cleveland Veterans Affairs Medical Center, and he has been using this "myoelectric" device for years, controlling it by flexing the muscles in his right arm. The prosthetic, typical of those used by amputees, provides only crude control. As we watch, Spetic grabs the cherry between his prosthetic thumb and forefinger so that he can pull off the stem. Instead, the fruit bursts between his fingers.

Next, my colleagues and I turn on the haptic system that we and our partners have been developing at the Functional Neural Interface Lab at Case Western Reserve University, also in Cleveland. Previously, surgeons J. Robert Anderson and Michael Keith had implanted electrodes in Spetic’s right forearm, which now make contact with three nerves at 20 locations. Stimulating different nerve fibers produces realistic sensations that Spetic perceives as coming from his missing hand: When we stimulate one spot, he feels a touch on his right palm; another spot produces sensation in his thumb, and so on.

To test whether such sensations would give Spetic better control over his prosthetic hand, we put thin-film force sensors in the device’s index and middle fingers and thumb, and we use the signals from those sensors to trigger the corresponding nerve stimulation. Again we watch as Spetic grasps another cherry. This time, his touch is delicate as he pulls off the stem without damaging the fruit in the slightest.
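As a rough illustration of how sensor signals might trigger the corresponding stimulation, here is a minimal Python sketch. It is not the lab's software: the sensor-to-contact assignment, the force units, the threshold, and the linear scaling are all assumptions made for the example, and the names `SENSOR_TO_CONTACT`, `StimCommand`, and `force_to_stimulation` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical assignment of each force sensor to one electrode contact.
# The article does not say which contact serves which finger.
SENSOR_TO_CONTACT = {"thumb": 0, "index": 1, "middle": 2}


@dataclass
class StimCommand:
    contact: int      # index of the electrode contact to stimulate
    intensity: float  # normalized 0..1; real stimulation units are not given


def force_to_stimulation(forces, threshold=0.05, max_force=10.0):
    """Turn force readings into per-contact stimulation commands.

    Forces are assumed to be in newtons. Readings below `threshold` are
    ignored; the rest are scaled linearly and clipped at 1.0.
    """
    commands = []
    for sensor, force in forces.items():
        if force < threshold:
            continue  # no contact detected on this finger
        intensity = min(force / max_force, 1.0)
        commands.append(StimCommand(SENSOR_TO_CONTACT[sensor], intensity))
    return commands


# Example: light thumb pressure, no index contact, hard middle-finger press.
commands = force_to_stimulation({"thumb": 2.0, "index": 0.0, "middle": 20.0})
for command in commands:
    print(command)
```

A linear map is the simplest possible choice here; how force should actually be encoded into a stimulation pattern is a research question the sketch does not try to answer.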

In our trials, he’s able to perform this task 93 percent of the time when the haptic system is turned on, versus just 43 percent with the haptics turned off. What’s more, Spetic reports feeling as though he is grabbing the cherry, not just using a tool to grab it. As soon as we turn the stimulation on, he says, "It is my hand."

Eventually, we hope to engineer a prosthesis that is just as capable as the hand that was lost. Our more immediate goal is to get so close that Spetic might forget, even momentarily, that he has lost a hand. Right now, our haptic system is rudimentary and can be used only in the lab: Spetic still has wires sticking out of his arm that connect to our computer during the trials, allowing us to control the stimulation patterns. Nevertheless, this is the first time a person without a hand has been able to feel a variety of realistic sensations for more than a few weeks in the missing limb. We’re now working toward a fully implantable system, which we hope to have ready for clinical trials within five years.

Building a sophisticated neural stimulation device that actually works outside the laboratory won’t be easy. The prosthesis will need to continuously monitor hundreds of tactile and position sensors on the prosthesis and feed that information back to the implanted stimulator, which then must translate that data into a neural code to be applied to the nerves in the arm. At the same time, our system will determine the user’s intent to move the prosthesis by recording the activity of up to 16 muscles in the residual limb. This information will be decoded, wirelessly transmitted out of the body, and converted to motor-drive commands, which will move the prosthesis. In total, the system will have 96 stimulation channels and 16 recording channels that will need to be coordinated to create motion and feeling. And all of this activity must be carried out with minimal time delays.

As we refine our system, we’re trying to find the optimal number of contacts. If we use three flattened electrode cuffs that each have 32 contacts, for example, we could hypothetically provide sensation at 96 points across the hand. So how many channels does a user need to have excellent function and sensation? And how is information across these channels coordinated and interpreted?
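The channel arithmetic in this example can be written out in a few lines of Python. The counts (three cuffs, 32 contacts each) come from the text above; the flat numbering scheme and the helper names `channel_index` and `cuff_and_contact` are assumptions for illustration only, not a description of the actual hardware addressing.

```python
CUFFS = 3               # flattened electrode cuffs, from the example above
CONTACTS_PER_CUFF = 32  # contacts on each cuff, from the example above


def channel_index(cuff, contact):
    """Map a (cuff, contact) pair to a flat channel number (hypothetical scheme)."""
    if not (0 <= cuff < CUFFS and 0 <= contact < CONTACTS_PER_CUFF):
        raise ValueError("cuff or contact out of range")
    return cuff * CONTACTS_PER_CUFF + contact


def cuff_and_contact(channel):
    """Inverse of channel_index: recover (cuff, contact) from a flat channel number."""
    if not 0 <= channel < CUFFS * CONTACTS_PER_CUFF:
        raise ValueError("channel out of range")
    return divmod(channel, CONTACTS_PER_CUFF)


total_channels = CUFFS * CONTACTS_PER_CUFF
print(total_channels)          # 96
print(channel_index(2, 31))    # 95, the last channel
print(cuff_and_contact(40))    # (1, 8)
```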

To make a self-contained device that doesn’t rely on an external computer, we’ll need miniature processors that can be inserted into the prosthesis to communicate with the implant and send stimulation to the electrode cuffs. The implanted electronics must be robust enough to last years inside the human body and must be powered internally, with no wires sticking out of the skin. We’ll also need to work out the communication protocol between the prosthesis and the implanted processor.

Such scenarios could become reality within the next decade. Sensation tells us what is and isn’t part of us. By extending sensation to our machines, we will expand humanity’s reach—even if that reach is as simple as holding a loved one’s hand.

©ictnews.az. All rights reserved.